
How to form a regression testing plan with these 5 questions

Make regression testing part of a software quality control plan. Determine the project's testing requirements and the team's preferences to shape an effective regression testing strategy.

When you put together a software test plan, incorporate regression tests, which confirm that code changes don't adversely affect the existing program functionality.

Regression testing helps teams manage the risks of change. QA teams execute regression tests in a variety of ways. They can repeat the exact same test they ran before the change, reuse the prior test idea, or vary the data and secondary conditions each time the test runs.
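As a minimal sketch of these options, assume a hypothetical `shipping_cost` function whose existing behavior a code change might break. The first check repeats the exact test from before the change; the second reuses the same test idea with different data, including a boundary value. The function, its pricing rule and the test names are illustrative assumptions, not part of any real product.

```python
# Hypothetical function under test; stands in for any existing behavior a change might break.
def shipping_cost(weight_kg: float) -> float:
    """Flat fee of 5.00 plus 1.50 per kilogram (illustrative pricing rule)."""
    if weight_kg < 0:
        raise ValueError("weight must be non-negative")
    return round(5.00 + 1.50 * weight_kg, 2)


def test_exact_repeat() -> None:
    # Option 1: repeat the exact same test that ran before the code change.
    assert shipping_cost(2.0) == 8.00


def test_same_idea_new_data() -> None:
    # Option 2: reuse the prior test idea (cost grows linearly with weight)
    # but vary the data, including the zero-weight boundary.
    cases = {0.0: 5.00, 1.0: 6.50, 10.0: 20.00}
    for weight, expected in cases.items():
        assert shipping_cost(weight) == expected, (weight, shipping_cost(weight))


if __name__ == "__main__":
    test_exact_repeat()
    test_same_idea_new_data()
    print("regression checks passed")
```

Repeating the identical test gives a stable baseline; varying the data widens the net without inventing a new test idea.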

Regression testing includes both functional testing and nonfunctional testing. Functional regression tests ensure that the software still works as intended. Nonfunctional regression tests evaluate whether changes lead to performance degradation or security issues.
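A nonfunctional regression check can be as simple as comparing a current timing against one recorded before the change. The sketch below assumes a hypothetical `workload` function, a baseline of 0.05 seconds and a 20% tolerance; all three are placeholders a team would replace with its own measurements. Wall-clock timings are noisy, so it takes the median of several runs rather than a single sample.

```python
import statistics
import time
from typing import Callable


def median_runtime(func: Callable[[], object], repeats: int = 5) -> float:
    """Run func several times and return the median wall-clock time in seconds."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def within_budget(baseline_s: float, current_s: float, tolerance: float = 0.20) -> bool:
    """True if the current runtime is at most `tolerance` (a fraction) slower than the baseline."""
    return current_s <= baseline_s * (1 + tolerance)


def workload() -> int:
    # Hypothetical operation whose speed the team wants to protect.
    return sum(i * i for i in range(100_000))


if __name__ == "__main__":
    current = median_runtime(workload)
    baseline = 0.05  # assumed baseline, in seconds, recorded before the change
    print(f"median={current:.4f}s, within budget: {within_budget(baseline, current)}")
```

Keeping the pass/fail rule in a separate pure function (`within_budget`) makes the threshold itself easy to test and adjust without rerunning the timing.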

In many cases, the regression test plan can and should include test automation. Regression tests run again every time the code changes, so they execute frequently. Automated regression tests can quickly check much of the software's functionality, which frees QA professionals to probe edge cases through exploratory testing. QA teams should find the mix of automated and manual tests that suits the regression testing plan, and apply the proper tools for each approach.
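Here is a minimal sketch of an automated regression suite using Python's built-in `unittest` module, which a CI job could run on every commit. The `normalize_username` function and its expected behavior are hypothetical stand-ins for existing functionality.

```python
import unittest


def normalize_username(name: str) -> str:
    # Hypothetical existing behavior that the regression suite protects.
    return name.strip().lower()


class UsernameRegressionTests(unittest.TestCase):
    """Cheap, repeatable checks that can run automatically on every code change."""

    def test_strips_whitespace(self) -> None:
        self.assertEqual(normalize_username("  alice "), "alice")

    def test_lowercases(self) -> None:
        self.assertEqual(normalize_username("Bob"), "bob")

    def test_empty_string_stays_empty(self) -> None:
        self.assertEqual(normalize_username(""), "")


def run_regression_suite() -> bool:
    """Load every test in the class, run it and report whether all of them passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameRegressionTests)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    print("suite passed" if run_regression_suite() else "suite FAILED")
```

In a CI pipeline, running `python -m unittest` instead returns a nonzero exit code on failure, which most CI systems treat as a failed build.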

Alongside manual tests, consider visual regression testing, in which tools evaluate web applications from the end user's perspective. This process can reveal when visual elements, such as images or text, overlap, and whether responsive design works as expected. Additionally, emulators and real devices provide a way to regression test mobile apps from the user's perspective.
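At its core, visual regression testing compares a new screenshot against an approved baseline. The sketch below assumes both screenshots are already decoded into rows of (R, G, B) tuples; real tools also capture the screenshots from browsers or devices and often use more forgiving perceptual comparisons. The 1% threshold and the function names are illustrative assumptions.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def diff_ratio(baseline: Image, current: Image, channel_tolerance: int = 0) -> float:
    """Fraction of pixels whose color differs by more than channel_tolerance on any channel."""
    if len(baseline) != len(current) or any(len(a) != len(b) for a, b in zip(baseline, current)):
        raise ValueError("screenshots must have the same dimensions")
    total = 0
    changed = 0
    for row_a, row_b in zip(baseline, current):
        for pa, pb in zip(row_a, row_b):
            total += 1
            if any(abs(ca - cb) > channel_tolerance for ca, cb in zip(pa, pb)):
                changed += 1
    return changed / total if total else 0.0


def visual_regression(baseline: Image, current: Image,
                      max_ratio: float = 0.01, channel_tolerance: int = 0) -> bool:
    """Flag a regression when more than max_ratio of the pixels changed."""
    return diff_ratio(baseline, current, channel_tolerance) > max_ratio


if __name__ == "__main__":
    WHITE = (255, 255, 255)
    BLACK = (0, 0, 0)
    baseline = [[WHITE, WHITE], [WHITE, WHITE]]
    current = [[WHITE, BLACK], [WHITE, WHITE]]  # one pixel changed, e.g. overlapping text
    print(diff_ratio(baseline, current))  # 0.25
```

The `channel_tolerance` parameter absorbs tiny rendering differences (such as anti-aliasing) so that only meaningful changes count against the threshold.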

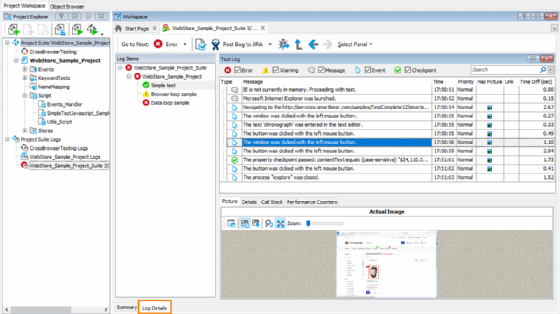

Regression testing tools

Editor's note: SearchSoftwareQuality editors compiled this sampling of the regression testing market based on software industry adoption and interest in various tools in 2019.

Testers can use a variety of testing tools to execute, manage and review regression tests. These tools range from full-featured vendor products to open source options.

Some popular regression testing tools include:

- Selenium, an open source tool that helps teams build cross-environment automated regression test suites;

- Sahi Pro, a licensed option for automated regression tests against a variety of applications, plus record and playback tools for browsers;

- Watir (Web Application Testing in Ruby), a lightweight, open source Ruby library for automated regression tests against web apps that drives browser interactions;

- SmartBear TestComplete, a licensed tool with regression test features for automation, database testing, test recording and custom extensions; and

- IBM Rational Functional Tester, another licensed automated testing tool that can evaluate a variety of web- and emulator-based apps and that offers visual editing and integration with other IBM products.

Before choosing a testing tool, however, verify that it fits the organization's specific requirements. QA must be able to maintain both the tests and the tool over the long term to keep them aligned with the regression testing plan.

Define a regression testing plan

When you write regression tests into a formal test plan, you have many factors to consider. The following five questions help shape an effective regression testing strategy.

- What's the goal of the testing? Understand how much coverage the project needs, and define this goal as clearly as possible. When the team is unclear about the goal of the regression testing plan, these tests become expensive and ineffective.

- What kind of coverage does the software team want from its regression testing? Coverage is hard to track in regression tests, because one test often covers multiple risks or areas of the application. Understand coverage so you can explain to other project stakeholders what your regression tests cover and how that affects them.

- What techniques will we use to execute and maintain the tests? How will you apply test automation within the regression testing plan? Which tests must run manually, and who will perform them? What skills do manual testers need, and which areas of the application must they know? What testing tools and infrastructure will automated tasks require? How will we maintain tests over time? These questions not only help refine a regression testing plan, but also shed light on how the team can run test automation as part of continuous integration.

- What environment(s) will we need to execute tests? Consider what data the application needs for these tests, as well as any custom configurations to deploy. Some tests might need to run against different configurations of the program, so plan how to manage those variations.

- How will we report the status of the testing? Define how testers will report results, including the level of detail, priorities and obstacles. As teams rely more on test automation and continuous testing, they must keep tests running efficiently and, whenever possible, eliminate flaky tests, which pass or fail inconsistently without any change to the code.

Alan Richardson of EvilTester explains regression testing.

The format of the regression testing plan doesn't matter, as long as it contains the answers to these five questions. Present the information in the same style and tone as the test plan template you use. If you don't use a template, brainstorm ways to structure the information so that it clarifies your testing strategy for the whole team, and get feedback on how well the presentation works.
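If a team keeps its plan alongside the code, one hypothetical option is a small structured record with one section per planning question, so a review step can flag questions that remain unanswered. The class and field names below are illustrative assumptions, not a standard template.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class RegressionTestPlan:
    """One field per planning question; field names are illustrative, not a standard template."""
    goal: str = ""
    coverage: str = ""
    techniques: str = ""
    environments: List[str] = field(default_factory=list)
    reporting: str = ""


def missing_sections(plan: RegressionTestPlan) -> List[str]:
    """Names of sections that are still empty, in declaration order."""
    return [f.name for f in fields(plan) if not getattr(plan, f.name)]


if __name__ == "__main__":
    draft = RegressionTestPlan(
        goal="Catch breakages in checkout before each release",
        techniques="Automated API suite in CI; manual exploratory pass on payments",
        environments=["staging", "staging-with-legacy-tax-config"],
    )
    print(missing_sections(draft))  # ['coverage', 'reporting']
```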